What is Caching

Let's start with a brief idea of what a cache is.

A cache is a hardware or software component that stores data so that it can be retrieved faster than from the original data source. It helps process user requests faster.

Caching happens at two different levels:

- Client-side caching

- Server-side caching

Client-Side Caching

On the client side, let's take Angular as an example front-end framework. Suppose we enter some values into the system and need a calculation based on them. The first time, the system takes our input values A, B, and C, goes to the database to fetch the values X, Y, and Z, performs the calculation, and finally returns the result to the user. Let's say this takes around 3 seconds.

Then another user on the same client comes along and repeats the same process, but this time the answer arrives in 1 second or less. But how?

That is the mechanism of caching. Because the previous answer was stored in the cache, it can be accessed very quickly.
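The idea above can be sketched in a few lines. This is only an illustration, written in Python for brevity rather than in Angular: the function names, the fixed X, Y, Z values, and the formula are all made up for the example.

```python
from typing import Dict, Tuple

# Counts how often the (hypothetical) database is actually reached.
stats = {"db_calls": 0}

def fetch_xyz_from_database() -> Tuple[int, int, int]:
    """Stand-in for the slow database lookup of X, Y, Z."""
    stats["db_calls"] += 1
    return (1, 2, 3)

# The cache: results keyed by the input values (A, B, C).
result_cache: Dict[Tuple[int, int, int], int] = {}

def calculate(a: int, b: int, c: int) -> int:
    key = (a, b, c)
    if key in result_cache:
        # Cache hit: the answer was stored earlier, no database trip needed.
        return result_cache[key]
    # Cache miss: take the slow path, then remember the answer.
    x, y, z = fetch_xyz_from_database()
    result = a * x + b * y + c * z  # made-up formula for illustration
    result_cache[key] = result
    return result
```

Calling `calculate` twice with the same inputs reaches the stand-in database only once; the second call is answered from `result_cache`.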

Server-Side Caching

Read Cache

It works like this. Suppose there are 3 parameters called ID, Username, and Userphone. When the very first user comes to the system and requests this data, the request goes to the database, which returns the response to the user, but this takes 5 minutes (an assumed value). At that moment the value is also stored in the server cache. When another user requests the same data within the session time, they get it in less than 2 seconds. That is server-side read caching.
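Here is a minimal sketch of such a read cache, assuming the "session time" is modelled as a time-to-live (TTL) on each entry. The class `ReadCache`, the loader `load_user_from_database`, and the sample user values are hypothetical names invented for this example.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

class ReadCache:
    """Keeps loaded values until their time-to-live runs out."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (expiry time, cached value)
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str, load: Callable[[str], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                      # still fresh: cache hit
        value = load(key)                        # miss or expired: go to the database
        self._entries[key] = (now + self._ttl, value)
        return value

# Records every request that really reaches the stand-in database.
database_reads: List[str] = []

def load_user_from_database(user_id: str) -> Dict[str, str]:
    """Stand-in for the slow database query."""
    database_reads.append(user_id)
    return {"id": user_id, "username": "alice", "userphone": "555-0100"}
```

With `cache = ReadCache(ttl_seconds=60)`, the first `cache.get("42", load_user_from_database)` goes to the database, and any repeat within 60 seconds is served from memory.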

Write Cache

When we talk about write caching, the main concern is the database, because that is where all the data is stored. Database resources are very expensive, and the database has a limited connection pool; as an example, let's say 200 connections. That is fine when the front end sends only 200 write requests, but what happens when it sends more than 200 at the same time?

In this situation the front end writes the requests to the cache. Between the cache and the database there is a middleman, the cache processors, which read from the cache and write to the database. This process is called write-back caching.

But you need to be careful with this kind of caching: if the cache fails before the pending writes reach the database, that data is gone. That is why you need a distributed cache or something similar.
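A rough sketch of write-back caching, assuming a plain dictionary stands in for the database and a `flush` method plays the role of the cache processor. All names here are invented for illustration.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple

class WriteBackCache:
    """Accepts writes immediately and pushes them to the database later."""

    def __init__(self, database: Dict[str, str]) -> None:
        self._database = database                      # stand-in for the real database
        self._values: Dict[str, str] = {}              # latest value per key
        self._pending: Deque[Tuple[str, str]] = deque()  # writes not yet persisted

    def write(self, key: str, value: str) -> None:
        # The caller returns right away; no database connection is used here.
        self._values[key] = value
        self._pending.append((key, value))

    def read(self, key: str) -> Optional[str]:
        if key in self._values:
            return self._values[key]
        return self._database.get(key)

    def pending_count(self) -> int:
        return len(self._pending)

    def flush(self, max_writes: int) -> int:
        """Cache processor step: persist up to max_writes queued writes."""
        written = 0
        while self._pending and written < max_writes:
            key, value = self._pending.popleft()
            self._database[key] = value
            written += 1
        return written
```

Anything still counted by `pending_count()` exists only in memory, which is exactly the data that would be lost if the cache went down before the next `flush`.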

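There is another method called write-through caching, which means that when you write to the cache you also write to the database at the same time. This keeps the cache and the database in sync, so reads are very fast and no data is lost if the cache fails, although each write is slower than with write-back because it waits for the database.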
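For comparison, a write-through cache might look like the sketch below. Again the database is just a dictionary and the class name is hypothetical.

```python
from typing import Dict, Optional

class WriteThroughCache:
    """Every write goes to the database and the cache in the same call."""

    def __init__(self, database: Dict[str, str]) -> None:
        self._database = database          # stand-in for the real database
        self._values: Dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        # Database first: if this step failed, the cache would stay unchanged.
        self._database[key] = value
        self._values[key] = value

    def read(self, key: str) -> Optional[str]:
        if key in self._values:
            return self._values[key]       # served from memory
        value = self._database.get(key)
        if value is not None:
            self._values[key] = value      # remember it for next time
        return value
```

There is no pending queue here, so nothing can be lost when the cache restarts; the price is that `write` only returns after the database has been updated.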

What is a Distributed Cache Cluster and its benefits

Before getting to the topic, let's take an example:

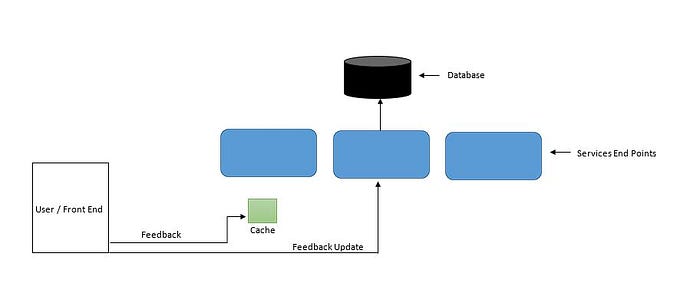

In this example, the user sends feedback, but that feedback is stored in the cache and has not been written to the database yet. A few seconds later the user needs to change the feedback and updates it. This time the update goes through the second service endpoint, which has no cache, so it is written straight to the database. The problem is that the very first feedback is still in the cache, ready to be written to the database, where it would overwrite the newer update. This is a cache consistency problem.

Let's look for a solution. A distributed cache cluster is the answer, but how? Let's see.

A distributed cache cluster is centralized: every service endpoint and every front-end update goes through it, so they all stay in sync. We are safe because whichever service endpoint is hit, it reads from and writes to the same cache cluster.
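To make the feedback example concrete, here is a small simulation. It assumes a single in-process object, `SharedCache`, stands in for the centralized cluster; a real deployment would use a networked cache, and every name below is made up for the illustration.

```python
from typing import Dict, Optional

class SharedCache:
    """In-process stand-in for the centralized cache cluster."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

class FeedbackService:
    """One service endpoint; all endpoints are given the same cache."""

    def __init__(self, name: str, cache: SharedCache) -> None:
        self.name = name
        self._cache = cache

    def save_feedback(self, user_id: str, text: str) -> None:
        self._cache.set("feedback:" + user_id, text)

    def read_feedback(self, user_id: str) -> Optional[str]:
        return self._cache.get("feedback:" + user_id)

# Both endpoints share one cache, so neither can hold a stale private copy.
cluster = SharedCache()
service_one = FeedbackService("service-1", cluster)
service_two = FeedbackService("service-2", cluster)

service_one.save_feedback("user-7", "first feedback")
service_two.save_feedback("user-7", "updated feedback")
latest = service_one.read_feedback("user-7")
```

Because the update made through `service_two` lands in the same cache that `service_one` reads, `latest` holds the updated feedback and the first version can no longer overwrite it.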